The Ultimate Ecommerce A/B Testing Guide

By Amelia Woolard

01/04/2024

In today's highly competitive digital landscape, A/B testing has become essential for businesses striving to gain a winning edge.

This rings particularly true in the ecommerce industry, where every click and conversion matters. With so much potential revenue riding on customer experience, A/B testing is now a critical tool for ecommerce brands seeking to customize their offerings to meet their customers' (and potential customers') specific needs.

If you're curious about A/B testing and how it can strengthen your ecommerce store, you’ve come to the right place. In this guide, we’ll break down everything you need to know about A/B testing for ecommerce brands, including the basic terminology associated with A/B testing, its benefits, and practical applications. You’ll even meet a couple of brands that are reaching new heights with the insights taken from A/B tests.

- A/B testing is used to compare at least two variations of a webpage, product listing, marketing campaign, and more.

- A/B testing is crucial for improving the customer experience, informing data-driven decisions, helping with SEO on your ecommerce website, and boosting conversion rate.

- To run an effective A/B test, you’ll want to identify the key elements you want to test, set the right sample size and duration, analyze results once the test is over, and then implement changes if necessary.

What Is an A/B Test?

If you've spent some time in the ecommerce industry, chances are you're familiar with the concept of A/B testing. However, you might still have lingering questions about how A/B testing works and its benefits.

So, let’s start from the ground up: What is an A/B test?

A/B or split testing is a technique used to compare at least two variations of a marketing component, like a webpage, product listing, checkout process, or email message.

To perform A/B testing, you must divide your target audience into at least two groups and present each group with a different variation. Then, you measure the results to find out which version performed the best. While goals can vary based on what is being tested, you’ll typically want to look for the version that yields higher engagement and conversions. No matter what your business goals are, those metrics will help you reach them.

What Are the Benefits of A/B Testing for Ecommerce?

When used properly, split testing helps brands keep their approach sharp and engaging, which helps them stay ahead in an increasingly competitive market.

There are plenty of good reasons to conduct an A/B test, but let's highlight four benefits that demonstrate the significance of testing your marketing campaigns:

Drives Data-Based Business Decisions

A/B testing is a valuable tool for businesses to make smart choices based on data. By comparing the test results of multiple versions of marketing campaigns to see which ones work best, A/B testing helps businesses find the most effective variations, improve their strategies, and create personalized offerings that enhance customer experiences.

A/B testing can also help mitigate risks related to any business changes or investments. With A/B testing, you can get a better idea of what to expect before you commit to your next campaign or major website update.

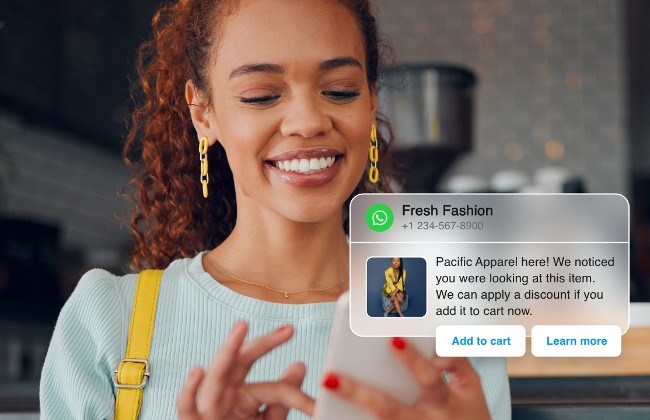

Improves the Customer Experience

Your customers have strong opinions on the marketing messages they receive. A/B testing makes it possible to test every step of your online shopping experience, from website layouts to checkout processes and what comes in between, so you can craft marketing messages that customers want to interact with.

A good A/B testing strategy allows your brand to identify and implement effective tactics that not only heighten engagement and conversion rates, but also enhance the overall customer experience. By leveraging customer data and personalized touchpoints like product recommendations, you can create a seamless journey that fosters customer satisfaction and loyalty (as well as more sales).

Helps With SEO

Did you know that leads generated by SEO have a 14.6% close rate? To turn casual viewers into paying customers, your SEO must be top-notch. That's why you need to include A/B testing in your marketing arsenal.

When you test meta tags, page titles, headings, URL structures, and keyword placements, you end up with the most effective optimizations for search engine rankings. A/B testing also gives ecommerce brands space to experiment with different SEO strategies, so they can adopt the strategies that work and leave behind those that do not.

Facilitates Marketing Strategy

Still dreaming about your ultimate marketing campaign? The one that scores a record number of conversions and earns you extra brownie points with your boss? A/B testing can make this dream a reality.

When you’re testing ad copy, email subject lines, visuals, call-to-action buttons, and more, you learn which elements resonate with your audience. With all of these learnings in tow, your business can allocate its marketing resources effectively, leading to impactful messaging, increased conversions and engagement, and ultimately, greater success. Plus, A/B testing encourages ongoing improvement so you can keep your strategy effective over the long run.

Where To Use A/B Testing for Ecommerce

In the fierce ecommerce world, optimizing every aspect of your online store is necessary to drive conversions and retain customers. From product pages to checkout processes, here are a few areas of an ecommerce site that are well-suited to A/B testing:

Call to Action (CTA)

Your message may be remarkable, but if you’re not drawing attention to your calls to action (CTAs), you won’t clinch the sale. That’s what makes CTAs such fertile ground for A/B testing. Everyone wants to know if their CTAs are getting any traction, and A/B testing can give you the answers you’re looking for.

A/B testing can optimize your CTAs by testing different elements such as wording, color, placement, size, design, or emojis. You'll also want to test it on different pages — does your CTA perform just as well on your product pages as your category pages or even your social media posts? By experimenting with different variations, you can determine which CTA generates the highest click-through rates and conversions.

Quick Product View

When you’re browsing items online, you may want to quickly view key details about a product without leaving the current page. In those instances, you’re likely using quick product view features.

A/B testing gives you the tools needed to optimize quick product views and discover which version leads to the desired user behavior (e.g., clicking through to the product page and purchasing the product). Consider testing different layouts, product detail placement, product videos, and image sizes to see which version is most successful.

Promotional Pop-Ups

Pop-ups earned a bad reputation in the early days of the internet. However, when done correctly, such as through on-site weblayers, they can drive significant results.

When you A/B test your pop-ups, pay attention to the design, messaging, and incentives given to the customer for engaging with your ad. Don’t overlook the timing of your pop-ups or weblayers, either. If you overwhelm customers with them, you may be perceived as “spammy.” Choose the result that connects with your audience and minimizes friction.

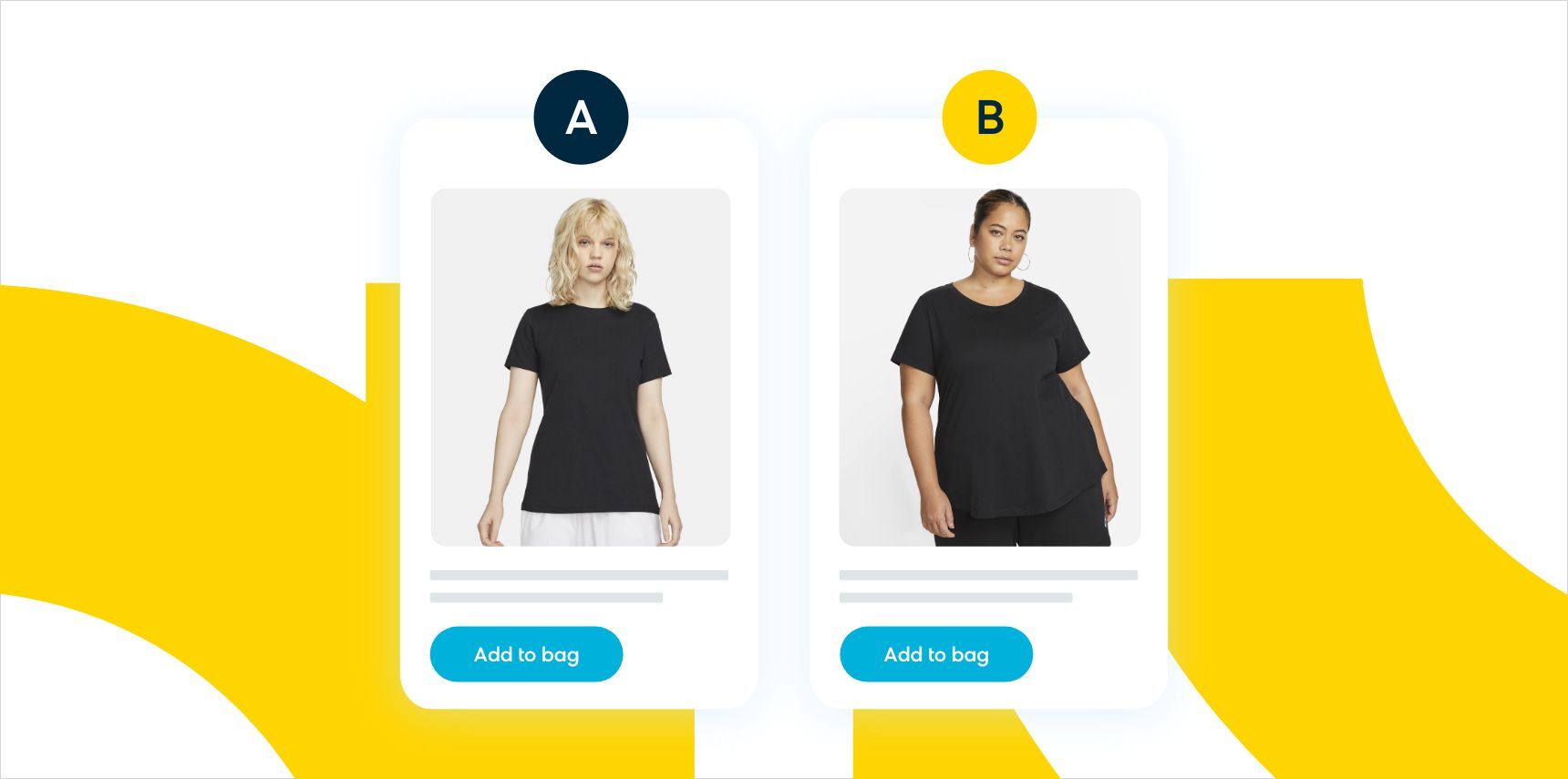

Product Imagery

Humans are visual creatures, and imagery is very important to us when we’re making purchases. Don’t overlook the importance of high-quality product images to show off your products and entice potential customers.

You may have never considered split testing your product photos, but it can help you pinpoint which images best sell your products. Image size, product angles, backgrounds, and the inclusion of lifestyle images are all up for debate with A/B testing. By comparing the performance of different image variations, you can determine which ones should be used on your website and digital ads.

Product Title and Copy

After your imagery, the most important parts of your product listing are the title and copy. Not only do the product title and copy inform customers about what they’re buying, but this content is also crawled by search engines and used to rank your page on search engine results pages (SERPs). Better SERP visibility means more potential customers, so this is important to get right.

Luckily, you can use A/B testing to test different headlines, descriptions, product features, and the tone of your copy. Identify the content combinations that provide the most compelling effect on customers, and watch your ecommerce sales take off.

Landing Pages

You may think of them as just another part of your website, but landing pages have the highest conversion rate of all signup forms at 23%. Conversions are even more likely if you A/B test your landing pages.

Headlines, images, layouts, CTAs, and other elements should all come under the microscope when testing these web pages. After analyzing metrics like conversion rates, bounce rates, and engagement, your team can zero in on which landing page performs better, improving your marketing success over time.

What Are Some A/B Testing Examples?

A/B testing is a great way to optimize digital experiences. Don’t take our word for it, though. Here are two real A/B testing examples from companies that have used Bloomreach’s powerful testing capabilities to refine their marketing strategies, improve website performance, and deliver personalized experiences that convert.

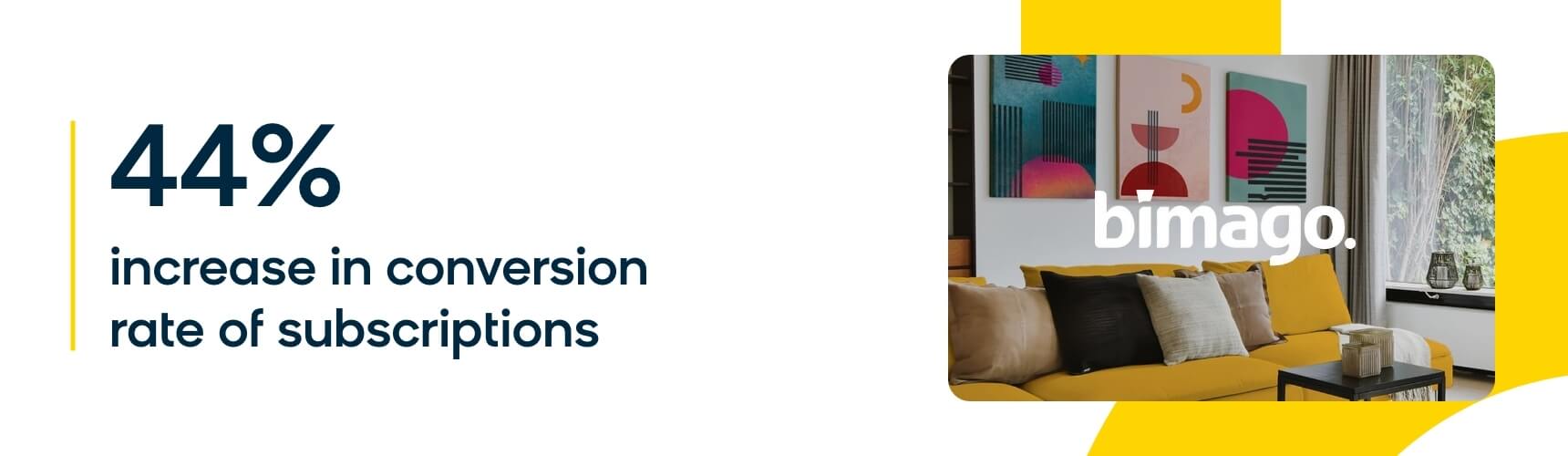

bimago

bimago is a leading retailer of interior design products, including art prints and textiles. To provide an industry-leading customer experience, bimago sought a way to test its marketing campaigns and optimize them over time. However, the company didn’t want just any A/B testing tool. bimago needed a solution that could cater to every customer, not just those counted among the winning majority of participants.

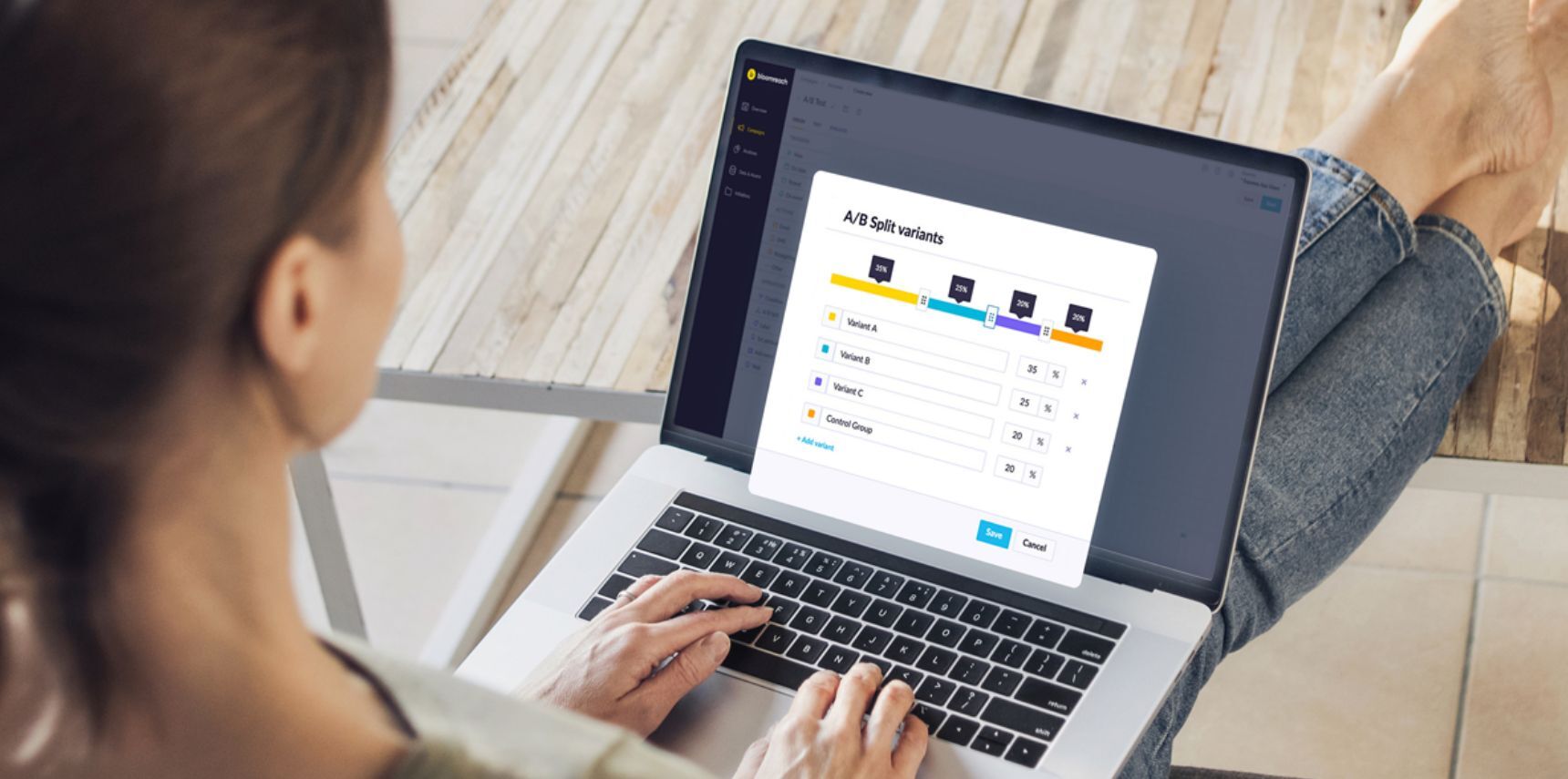

The company turned to Bloomreach Engagement for A/B testing software with built-in contextual personalization. Engagement uses artificial intelligence and machine learning to select the best variant for each website visitor, considering factors such as device, time of day, customer history, and brand affinity. Unlike traditional ecommerce testing, contextual personalization ensures that 100% of customers see the variant they prefer, eliminating dissatisfaction among a portion of the audience.

bimago aimed high with its split testing goals, and it paid off. With Bloomreach Engagement, bimago experienced a 44% jump in conversions compared to traditional A/B testing.

Whisker

Famous for creating the world’s first automated litter box in 2000, Whisker has grown to become a leading provider of connected pet products. Like many other ecommerce businesses, Whisker sought a simple way to personalize its touchpoints with customers and manage their preferences and other data in one place. The company also wanted to use A/B and multivariate testing tools to optimize its marketing messages.

Whisker deployed Bloomreach Engagement and soon began taking advantage of Engagement’s unique single customer view dashboard, which aggregates customer data and keeps it organized for easy retrieval. Then, Whisker used these newly organized data sources to perform ecommerce testing on its email campaigns. With Engagement on its side, Whisker saw a 107% increase in conversion rate for users who received persistent messaging.

How To Run an A/B Test

Executing an effective A/B test requires careful planning and methodical execution. By following a structured approach, you can gain valuable insights and make data-driven decisions to optimize your marketing strategy.

Determine Your Topic for Testing and a Goal

A successful A/B test starts with a predetermined topic and a clear goal. Ask yourself which part of your marketing strategy you’d like to optimize. Maybe you’d like to learn how much attention your customer is paying to your headline or you want to know the type of CTA most likely to drive clicks.

Once you have your test idea, get clear on your goals with the A/B test. Sure, you’re interested in which version performs the best, but what is your definition of success? Is it more traffic to your ecommerce site, higher conversions, more engagement, or greater click-through rates? Settle this and you’ll be off on the right foot with A/B testing.

Understanding Statistical Significance

When you perform A/B testing, it’s always possible that your results are due to chance. Because chance results won’t help you improve your ecommerce marketing, you’ll want to achieve statistically significant results when running your tests.

Statistical significance refers to the level of confidence in the results obtained from comparing two or more test variations. It helps determine whether your split test has generated reliable data or if it’s just a fluke.

To calculate statistical significance in A/B testing, you can use techniques such as t-tests or chi-square tests, which analyze the data collected from the control and variation groups.

The significance level (alpha) is often set at 0.05, which corresponds to a 95% confidence level. It serves as the threshold for deciding whether the observed differences are statistically significant or likely to have occurred by chance. A p-value below the significance level (p < 0.05) suggests that your results are statistically significant: a difference that large would be unlikely if the variations truly performed the same.
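To make the p-value check concrete, here is a minimal sketch of a two-proportion z-test using only Python's standard library. For a simple two-group comparison of conversion counts, this test is equivalent to a chi-square test without continuity correction. The function name and the visitor and conversion numbers are hypothetical, chosen only for illustration; your testing tool will normally run this calculation for you.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: 5,000 visitors per group.
z, p = two_proportion_z_test(conv_a=400, n_a=5000, conv_b=460, n_b=5000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
print("significant at 0.05" if p < 0.05 else "not significant at 0.05")
```

The normal approximation used here assumes reasonably large groups; for very small samples, an exact test is usually a safer choice.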

Create a Hypothesis

Now that you know what you’re testing and what you hope to learn from it, it’s time to create a test hypothesis.

When creating a hypothesis, start by clearly defining the control and variation groups and the specific element or variation being tested. Then, articulate the expected impact or difference between the two versions. For example, if testing a new CTA button color, the hypothesis could be that changing the button from black to red will increase your click-through rate.

Use your reasoning skills, industry knowledge, and any other relevant data to develop a sound prediction that can be measured and potentially proven wrong. If your hypothesis is rooted in something immeasurable, you won’t be able to accurately weigh your testing results and come away with anything meaningful.

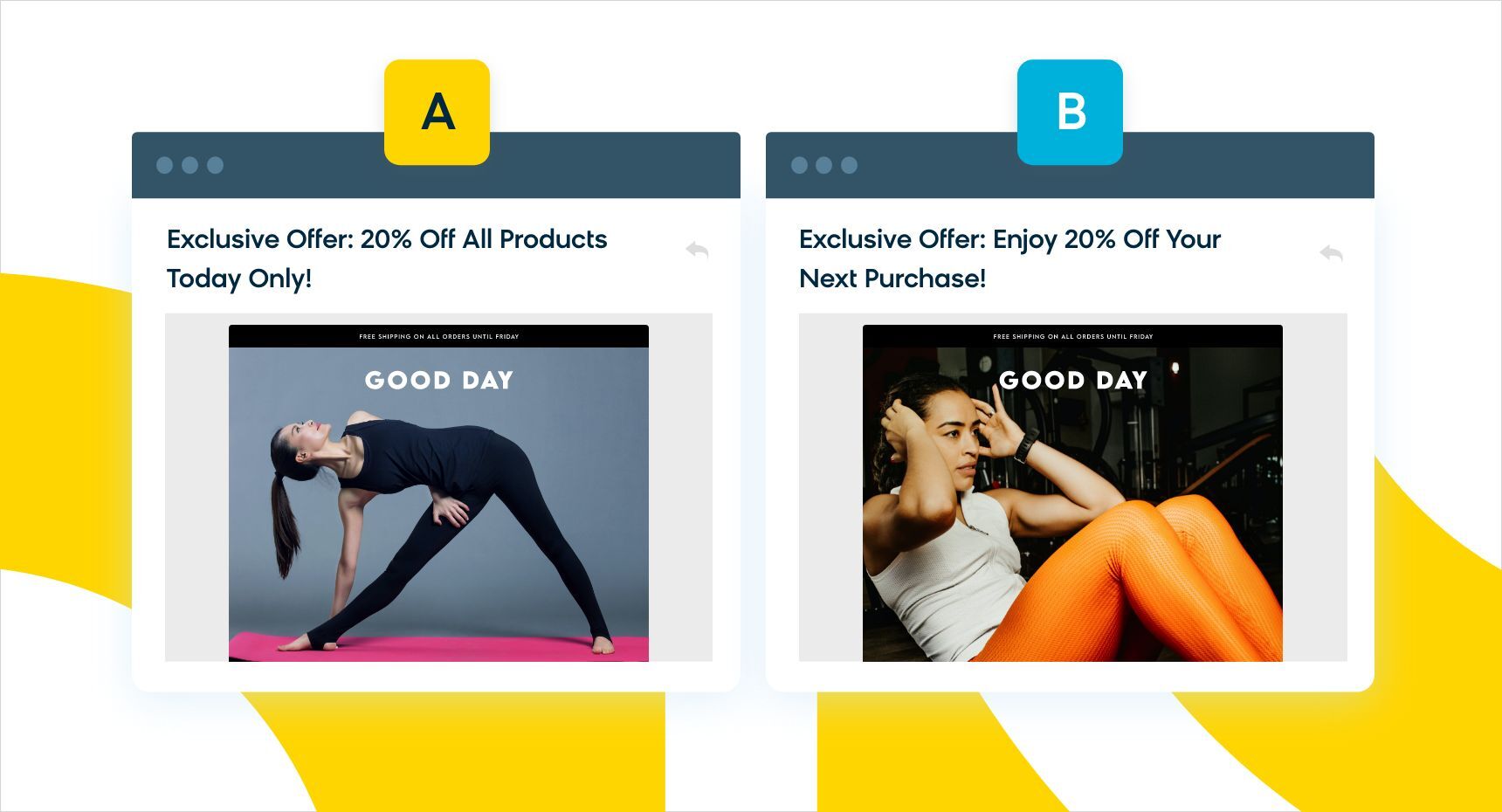

Create Variation 1 and Variation 2

Once you’ve determined the topic for A/B testing and created a hypothesis, the next step is to create your variations. These variations represent the different versions of your elements that will be tested against the existing version (or control element), which hasn’t been changed.

When creating variations, focus on changing one specific element at a time while keeping other aspects consistent. If you change multiple variables at once, you won’t be able to tell which change drove the result. For example, if you’re testing an email subject line, version A could add an emoji to the control subject line, while version B could add the recipient's name instead. No matter how you differentiate each variation, be sure to isolate the changes so you can correctly assess how they influence the desired goal.

How To Calculate A/B Test Sample Size

When you accurately calculate your A/B testing sample size, you ensure that the test has sufficient statistical power to detect the differences between your variations and provide reliable insights. Here’s how to do it:

- First, determine the desired statistical power. This is typically set at 80% or higher and represents the likelihood of detecting a true difference (if it exists).

- Then, choose an acceptable level of significance, commonly set at 5% (0.05), which corresponds to a 95% confidence level. This level helps you determine when a result is considered statistically significant.

- Finally, consider how big of a difference you expect to see and how much the data might vary. These factors help you choose the right sample size for your A/B test. You can use a sample size calculator or a power analysis formula matched to the test you plan to run (such as a t-test or chi-square test) to determine the sample size that will give you reliable test results.
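The three inputs above can be combined in a rough calculation. The sketch below uses a common normal-approximation formula for comparing two conversion rates; it is one reasonable approach, not the only one, and dedicated calculators may give slightly different numbers. The function name, the 10% baseline rate, and the 12% expected rate are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH group for a two-sided test."""
    # Critical values for the chosen significance level and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two conversion rates.
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical scenario: 10% baseline conversion, hoping to detect 12%.
n = sample_size_per_group(0.10, 0.12)
print(f"Visitors needed per group: {n}")
```

Note how quickly the requirement grows as the expected difference shrinks: detecting a small lift takes far more traffic than detecting a large one.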

Set Up Your Test

Next, it’s time to start testing! Set up your A/B test by using your testing tool or platform to establish the duration of the test and the sample size needed to achieve statistical significance. For unbiased results, randomly assign your online shoppers to the control and variation groups.
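Most testing platforms handle group assignment for you. If you ever need to reason about it yourself, one common approach (an assumption here, not something specific to any tool) is to hash a stable visitor ID so that each shopper lands in an effectively random group but always sees the same version on return visits. The function name, the ID format, and the experiment name below are hypothetical.

```python
import hashlib


def assign_group(user_id, experiment, groups=("control", "variation")):
    """Deterministically map a user to a group with a roughly even split."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(groups)
    return groups[bucket]


# Check the split across 10,000 hypothetical visitor IDs.
counts = {"control": 0, "variation": 0}
for i in range(10_000):
    counts[assign_group(f"user-{i}", "cta-color-test")] += 1
print(counts)
```

Because the assignment depends only on the ID and the experiment name, no lookup table is needed to keep a returning visitor in the same group.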

During the testing phase, it's important to closely monitor the data and metrics to track the progress of the test. Allow sufficient time for the test to run, considering factors such as website traffic volume and seasonality. For example, when running tests during a period of high traffic, like the holidays, be sure to consider that factor when comparing data from that period to data from a slower time of year. Ignore the seasonality of customers’ purchasing patterns and you’ll have misleading data.

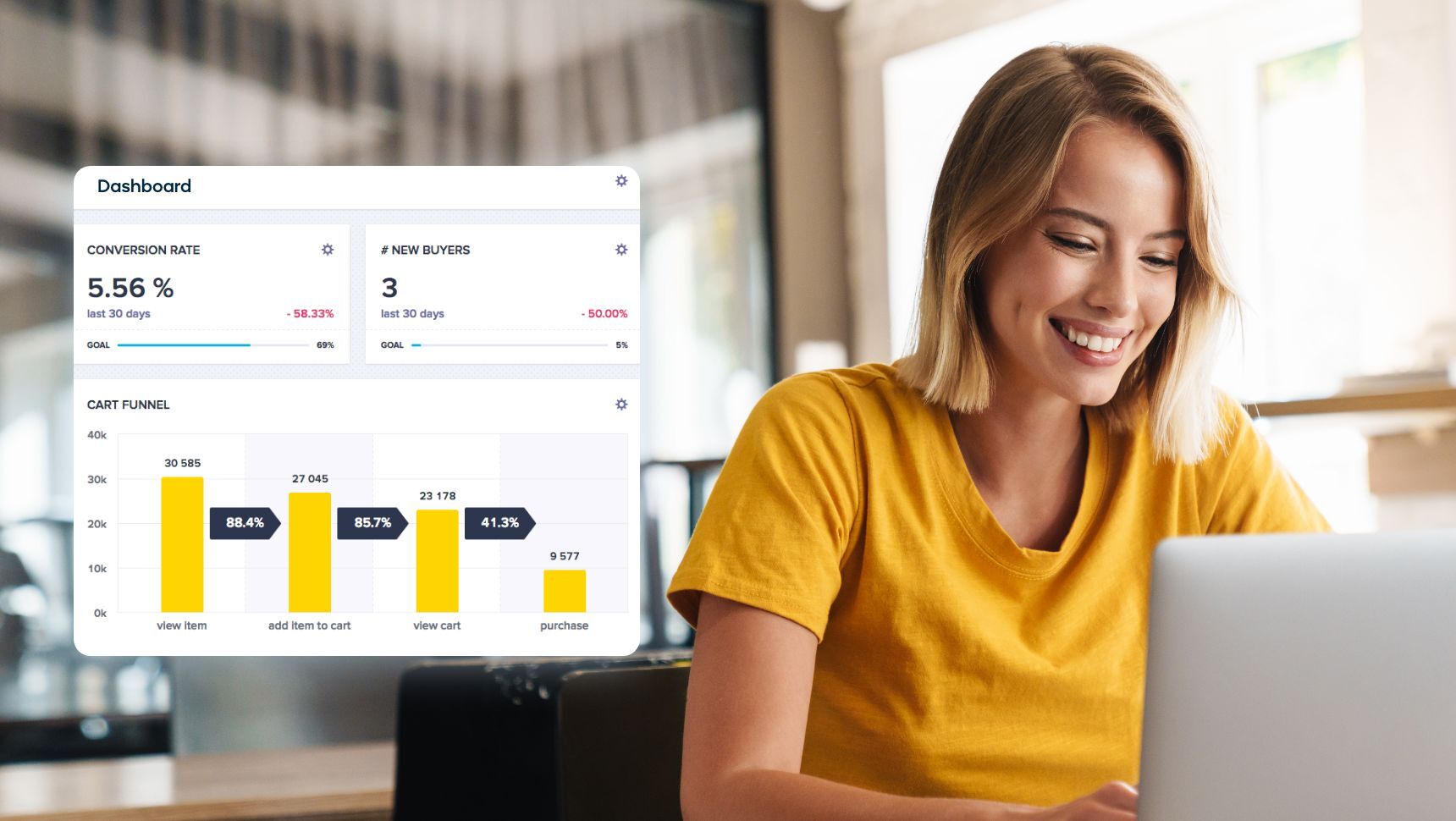

Analyze the Results

You’ve planned and executed your A/B or multivariate test to perfection. Now, it’s time to discover what exciting intel your testing process has in store for you.

Analyzing the results of your A/B tests is a crucial part of improving your marketing strategy (as well as future tests). Start by comparing the performance of the control and variation groups based on the predefined metrics and goals. After confirming the statistical significance of your results, examine your data for any noticeable patterns, trends, or significant variations that demonstrate how your changes have contributed to achieving your goal. Did you get your desired outcome? If the test succeeds, it’s a good sign to move on to other testing elements to further optimize your content.
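A simple first comparison is the conversion rate of each group and the relative lift of the variation over the control. The sketch below shows that arithmetic with hypothetical visitor and conversion counts; the function names are illustrative and not part of any particular tool.

```python
def conversion_rate(conversions, visitors):
    """Share of visitors who converted, as a fraction between 0 and 1."""
    return conversions / visitors


def relative_lift(control_rate, variation_rate):
    """Relative change of the variation versus the control, as a fraction."""
    return (variation_rate - control_rate) / control_rate


# Hypothetical results: 5,000 visitors in each group.
control = conversion_rate(400, 5000)
variation = conversion_rate(460, 5000)
lift = relative_lift(control, variation)
print(f"control {control:.1%}, variation {variation:.1%}, lift {lift:+.1%}")
```

A lift figure on its own says nothing about reliability, which is why it should be read alongside a significance check.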

How To Find Statistical Confidence

A/B tests are helpful, but sometimes, even the best-planned tests spit out data that isn’t reliable. Understanding statistical confidence will help you see the difference between useful and unhelpful A/B testing data.

Statistical confidence refers to the level of certainty in the accuracy and reliability of the results obtained from your test or study. To find statistical confidence in A/B testing, follow these steps:

- First, set up hypotheses: the null hypothesis (no difference between the versions) and the alternative hypothesis (a real difference between the versions).

- Next, choose a level of significance (usually 0.05) to decide if the observed difference is meaningful.

- Collect data from both the control and experimental groups and use statistical tests like t-tests or chi-square tests to analyze the data.

- The test will give you a p-value, which shows how likely you would be to see a difference at least as large as the one observed if there were no real difference between the versions. If the p-value is less than 0.05, you can reject the null hypothesis and conclude there's a statistically significant difference in the results. The smaller the p-value, the stronger the evidence against the null hypothesis.

Remember, statistical confidence doesn't make your findings practically significant or valuable in the real world. Always consider both statistical and practical significance when interpreting the results of a split test.
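One way to weigh statistical and practical significance together is to look at a confidence interval for the difference in conversion rates, then compare it with the smallest lift that would be worth acting on. The sketch below uses a normal-approximation interval; the function name, the data, and the 0.5 percentage point threshold are all hypothetical and should be replaced with values that fit your business.

```python
import math
from statistics import NormalDist


def difference_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Confidence interval for (rate_b - rate_a), normal approximation."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Unpooled standard error of the difference between the two rates.
    std_err = math.sqrt(rate_a * (1 - rate_a) / n_a
                        + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = rate_b - rate_a
    return diff - z * std_err, diff + z * std_err


# Hypothetical results: 5,000 visitors in each group.
low, high = difference_confidence_interval(400, 5000, 460, 5000)

# Hypothetical business threshold: smallest absolute lift worth implementing.
min_worthwhile_lift = 0.005

# Significant if the interval excludes zero.
statistically_significant = low > 0 or high < 0
# Practically significant only if even the low end clears the threshold.
practically_significant = low >= min_worthwhile_lift

print(f"95% CI for the difference: [{low:.4f}, {high:.4f}]")
print(f"statistically significant: {statistically_significant}")
print(f"practically significant:   {practically_significant}")
```

With these example numbers, the interval excludes zero but its low end falls below the threshold, so the result is statistically significant without being clearly worth the change.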

Use the Results To Inform On-Site Changes

The best data in the world is meaningless if it’s unused. That’s why the final stage of any good A/B test involves applying your results to your marketing strategy.

By analyzing the data and identifying the versions of your A/B tests that performed better, you can make necessary adjustments to enhance user experience, amplify conversions, or attain specific objectives. For example, you might find out that more detailed product descriptions improve conversions or that customers prefer the free shipping variant of your email offer. Those changes are justified when your data confirms that they will improve performance.

Supercharge Your A/B Testing Strategy With Bloomreach

A/B testing is a powerful tool for optimizing ecommerce strategies. With A/B testing, your ecommerce business can unlock the potential to deliver personalized experiences, enhance customer satisfaction, and scale up in the ever-evolving digital marketplace.

For the best partner in your A/B testing journey, turn to an all-in-one solution like Bloomreach Engagement. Our cutting-edge platform streamlines A/B testing for you and provides out-of-the-box analytics and customer insights, so you can devote less time to setup and more time to running tests and getting the insights you need.

Bloomreach Engagement is also powered by Loomi, an AI built specifically for ecommerce, which opens up even more avenues for testing and conversion rate optimization. With AI-driven contextual personalization, you can serve up the best variant for every individual customer — not just the majority.

Learn more about how Bloomreach's unique approach to A/B testing helps ecommerce brands like yours outshine the competition.
